Exploring what California’s new AI law means for college campuses.

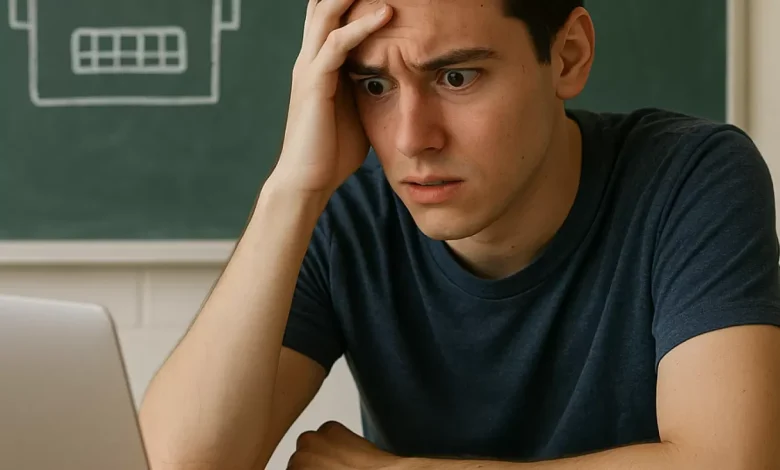

As artificial intelligence becomes woven into daily campus life, college students are navigating a new kind of pressure, one that blends academic stress with digital uncertainty. From essay-writing bots to emotional chat companions, AI is changing how young adults think, create, and cope.

Last month, California became the first state to enact a sweeping AI safety law of this kind: SB 53, the Transparency in Frontier Artificial Intelligence Act. The legislation requires major AI developers to publish safety frameworks, report critical safety incidents to the state within 15 days, and explain how they plan to prevent catastrophic misuse.

While the law is aimed at large tech companies, its ripple effects are likely to reach classrooms, counseling centers, and college campuses across the country. Universities are among the fastest adopters of AI tools, in both academic settings and student life. Yet unlike lab equipment or medication, AI tools have come with no standardized safety protocols for their psychological or cognitive effects on students.

This new wave of regulation invites higher education to think differently about what “AI safety” really means. It’s not just about preventing cyberattacks or misinformation: It’s also about protecting students’ attention, identity, and mental health in environments increasingly shaped by algorithms.

November 6th FDA Hearing on AI

The FDA’s Digital Health Advisory Committee will hold a hearing on November 6, 2025, following closely on the heels of the California law. The hearing is expected to be a pivotal event for both practicing clinicians and college students, signaling a formal shift in how technology is integrated into mental healthcare. The committee will focus on regulating “generative AI-enabled digital mental health medical devices,” a discussion that will shape the future clinical toolkit for practitioners by defining new safety standards and raising critical questions about liability and patient care. Its conclusions could move some AI tools out of the realm of simple wellness apps and into that of regulated medical devices. For college students, who are a primary demographic for these digital tools, the outcome will bear directly on the safety and reliability of the mental health apps frequently used on campuses. The hearing also offers a glimpse of a future in which validating and collaborating with sophisticated AI systems, guided by sound ethical frameworks, will likely become a core professional responsibility. It will be streamed live from 9 a.m. to 6 p.m. ET.

Why Faculty and Mental Health Professionals Should Care

College students are now the first generation to experience artificial intelligence as a constant presence in their learning and emotional lives. Many rely on AI to brainstorm papers, manage stress, or even provide companionship when they feel isolated. While these tools can be empowering, they also blur boundaries between human growth and machine dependence.

One student captured this tension perfectly, stating, “Honestly, it’s making me really anxious about finding a job. It feels like AI is already taking over the entry-level jobs that we’re supposed to get right after graduation.” Another worried about the cognitive toll: “I noticed that I started using AI for everything and it was making my brain used to not thinking. If you just let AI do everything, you won’t develop critical thinking skills.”

Faculty often see the academic side of this: Students turning in polished essays that feel detached from their own voices, or showing signs of burnout from constant digital multitasking. Counselors see the emotional side: Students reporting AI-induced anxiety and a sense of disconnection from their own creativity.

Dr. Jessica Kizoreck sees this daily as a professor who specializes in AI anxiety. “Students are caught in a double bind. They feel anxious if they don’t use AI because they fear falling behind their peers. But they also feel anxious when they do use it, as it leads them to question their own abilities and originality. This cycle is a direct path to feelings of inadequacy and burnout.”

The new California law offers a moment for reflection: If policymakers are building guardrails for the nation’s most powerful AI systems, colleges and universities should be doing the same for their students. That means teaching AI literacy as emotional literacy, guiding students to use these tools with intention, and helping them understand the psychological trade-offs of outsourcing too much thinking—or feeling—to technology.


Psychological Safety as the New Frontier of AI Ethics

In technology circles, “AI safety” usually means preventing catastrophic risks like misinformation, data breaches, or autonomous harm. But in higher education, the quieter dangers are psychological. Students describe feeling overstimulated, disconnected, or creatively “numb” after relying too heavily on AI. Others struggle with anxiety about whether their ideas are truly their own.

These are not technical failures; they’re human ones. And they call for a different kind of oversight: what psychologists might call psychological safety. On campus, this means helping students maintain a healthy sense of agency, curiosity, and self-efficacy while engaging with powerful new tools. It’s about teaching students to stay human while learning with machines.

Dr. Otis Kopp, a professor at Florida International University whose research includes technology adoption, believes students are facing a new authenticity crisis. “In a world where personal branding is everything, students face the challenge of building an authentic identity when their creative output is blended with AI,” he notes. “It creates a subtle but persistent imposter syndrome, leaving them to constantly ask themselves, ‘Is this really my idea, or am I just a prompt engineer for a machine?’”

Faculty and clinicians can model this balance by treating AI as a supplement to, not a substitute for, reflection, creativity, and authentic learning. Courses that integrate AI should also encourage digital rest, journaling, and mindful use. Counseling centers can normalize conversations about AI anxiety and identity confusion, helping students process the emotional side of technological change.

In the long run, creating a culture of psychological safety around AI will matter just as much as coding technical safeguards. The real test of responsible innovation isn’t just whether the machines behave, but whether the humans using them stay whole.