NeurIPS 2025 Best Papers in Comics

Last week the NeurIPS 2025 Best Paper Awards were announced.

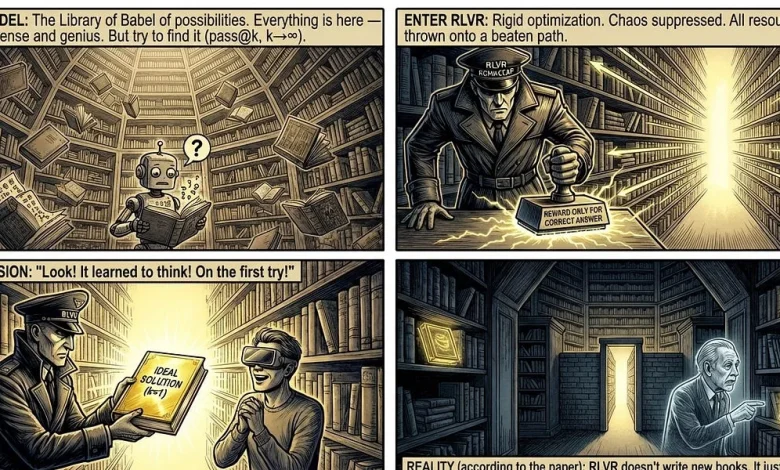

As I did with the ICML 2025 Outstanding Papers, I ran my automated paper review system to create summaries of all the award-winning and runner-up papers. This time I also generated comics that explain each paper in a single image.

Here are the results.

Authors: Liwei Jiang, Yuanjun Chai, Margaret Li, Mickel Liu, Raymond Fok, Nouha Dziri, Yulia Tsvetkov, Maarten Sap, Yejin Choi

Paper: https://arxiv.org/abs/2510.22954, NeurIPS submission

Code: https://github.com/liweijiang/artificial-hivemind

Datasets: HF Collection

WHAT was done? The authors introduce INFINITY-CHAT, a dataset of 26K real-world open-ended queries, to systematically evaluate output diversity across 70+ state-of-the-art LLMs. They identify a pervasive “Artificial Hivemind” phenomenon where models exhibit extreme mode collapse—both repeatedly generating the same outputs internally (intra-model) and converging on strikingly similar responses across different model families (inter-model).

WHY it matters? This invalidates the common assumption that increasing temperature or using model ensembles guarantees diversity. The study reveals that modern RLHF and instruction tuning have homogenized the “creative” latent space of models to such a degree that distinct models (e.g., DeepSeek and GPT-4) act as near-identical clones on open-ended tasks. Furthermore, it demonstrates that current Reward Models are poorly calibrated to diverse human preferences (pluralism), failing to score valid but idiosyncratic responses correctly.

Link to the full review.

Authors: Zihan Qiu, Zekun Wang, Bo Zheng, Zeyu Huang, Kaiyue Wen, Songlin Yang, Rui Men, Le Yu, Fei Huang, Suozhi Huang, Dayiheng Liu, Jingren Zhou, Junyang Lin (Qwen Team)

Paper: https://arxiv.org/abs/2505.06708, NeurIPS submission

Code: https://github.com/qiuzh20/gated_attention

Model: https://huggingface.co/collections/Qwen/qwen3-next

WHAT was done? The authors introduce Gated Attention, a mechanism that applies a learnable, input-dependent sigmoid gate immediately after the Scaled Dot-Product Attention (SDPA) output. By modulating the attention output Y with a gate σ(XW_θ), the method introduces element-wise sparsity and non-linearity before the final output projection.

WHY it matters? This simple architectural modification yields profound stability improvements for large-scale training (eliminating loss spikes) and consistently improves perplexity on 15B MoE and 1.7B dense models. Crucially, it mechanistically eliminates the “Attention Sink” phenomenon and “Massive Activations” without requiring heuristic fixes like “sink tokens,” thereby significantly improving long-context extrapolation.
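To make the gating step concrete, here is a minimal single-head sketch in plain Python. It is not the Qwen team's implementation: the function and parameter names (`gated_attention`, `w_gate`, `use_gate`) are hypothetical, there is no causal mask or multi-head split, and the gate weights are assumed to have the same shape as the value projection.

```python
import math


def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax(row):
    """Numerically stable softmax over one row of attention scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(v):
    """Logistic function, written to avoid overflow for large |v|."""
    if v >= 0:
        return 1.0 / (1.0 + math.exp(-v))
    e = math.exp(v)
    return e / (1.0 + e)


def gated_attention(x, w_q, w_k, w_v, w_gate, w_out, use_gate=True):
    """Single-head attention with an elementwise sigmoid gate on the SDPA output.

    x is (tokens, d_model); w_q, w_k, w_v, w_gate are (d_model, d_head);
    w_out is (d_head, d_model). Shapes and names are illustrative assumptions.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    scale = 1.0 / math.sqrt(len(q[0]))
    scores = [[scale * sum(a * b for a, b in zip(qi, kj)) for kj in k] for qi in q]
    y = matmul([softmax(row) for row in scores], v)  # SDPA output Y
    if use_gate:
        # Gate computed from the same input X: sigma(X W_theta)
        gate = [[sigmoid(g) for g in row] for row in matmul(x, w_gate)]
        # Elementwise modulation of Y before the output projection
        y = [[yi * gi for yi, gi in zip(y_row, g_row)] for y_row, g_row in zip(y, gate)]
    return matmul(y, w_out)


if __name__ == "__main__":
    identity = [[1.0, 0.0], [0.0, 1.0]]
    zero_gate = [[0.0, 0.0], [0.0, 0.0]]
    x = [[1.0, 2.0], [0.5, -1.0]]
    print(gated_attention(x, identity, identity, identity, zero_gate, identity))
```

With all-zero gate weights the gate is 0.5 everywhere, so the output is exactly half of the ungated one. A trained gate can instead push individual channels toward zero, which is where the sparsity comes from.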

Link to the full review.

Authors: Kevin Wang, Ishaan Javali, Michał Bortkiewicz, Tomasz Trzciński, Benjamin Eysenbach

Paper: https://openreview.net/forum?id=s0JVsx3bx1

Project page: https://wang-kevin3290.github.io/scaling-crl/

WHAT was done? The authors scaled Reinforcement Learning (RL) policies from the standard 2–5 layers to over 1,000 layers by combining Self-Supervised Learning (specifically Contrastive RL) with modern architectural choices such as residual connections, LayerNorm, and Swish activations.

WHY it matters? This challenges the prevailing dogma that RL does not benefit from depth. While standard algorithms like SAC saturate or collapse with deeper networks, this work shows that Contrastive RL allows for continued performance scaling (2x–50x gains), enabling agents to solve long-horizon humanoid mazes and develop emergent locomotor skills without explicit reward engineering.
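The three architectural ingredients named above can be sketched as one residual block in plain Python. This is only an illustration: the Dense, LayerNorm, Swish ordering inside the block, the absence of learnable LayerNorm parameters, and all function names are my assumptions, not the authors' code.

```python
import math


def swish(v):
    """Swish (SiLU) activation, v * sigmoid(v), written to avoid overflow."""
    if v >= 0:
        return v / (1.0 + math.exp(-v))
    e = math.exp(v)
    return v * e / (1.0 + e)


def layer_norm(v, eps=1e-5):
    """Normalize a vector to zero mean and unit variance (no learnable scale/shift)."""
    mean = sum(v) / len(v)
    var = sum((x - mean) ** 2 for x in v) / len(v)
    return [(x - mean) / math.sqrt(var + eps) for x in v]


def dense(v, weights, bias):
    """Affine layer; weights is (in_dim, out_dim) stored as a list of rows."""
    return [sum(x * w for x, w in zip(v, col)) + b for col, b in zip(zip(*weights), bias)]


def residual_block(v, weights, bias):
    """x + Swish(LayerNorm(Dense(x))): the skip path carries x through unchanged."""
    h = [swish(z) for z in layer_norm(dense(v, weights, bias))]
    return [x + hi for x, hi in zip(v, h)]


def deep_network(v, blocks):
    """Apply a stack of residual blocks, each given as a (weights, bias) pair."""
    for weights, bias in blocks:
        v = residual_block(v, weights, bias)
    return v


if __name__ == "__main__":
    width = 4
    zero_w = [[0.0] * width for _ in range(width)]
    zero_b = [0.0] * width
    x = [1.0, -2.0, 0.5, 3.0]
    # With zero weights every block reduces to the identity, even at depth 1000.
    print(deep_network(x, [(zero_w, zero_b)] * 1000))
```

The zero-weight case shows why the skip connection matters at this depth: a block that has learned nothing passes its input through untouched, so stacking a thousand of them does not by itself destroy the signal.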

Link to the full review.

Authors: Tony Bonnaire, Raphaël Urfin, Giulio Biroli, Marc Mézard

Paper: https://arxiv.org/abs/2505.17638, NeurIPS submission

Code: https://github.com/tbonnair/Why-Diffusion-Models-Don-t-Memorize

WHAT was done? The authors provide a theoretical and empirical analysis characterizing the training dynamics of score-based diffusion models. Recognizing that models can eventually overfit, they identify two distinct timescales: τ_gen, when the model learns to generate valid samples, and τ_mem, when it begins to memorize specific training instances. This work received a Best Paper Award at NeurIPS 2025.

WHY it matters? This work resolves the paradox of why overparameterized diffusion models generalize despite having the capacity to perfectly memorize training data. By proving that τ_mem scales linearly with the dataset size n while τ_gen remains constant, the paper establishes that “early stopping” is not just a heuristic, but a structural necessity driven by Implicit Dynamical Regularization. This explains why larger datasets widen the safety window for training, allowing massive models to generalize robustly.

Link to the full review.

Authors: Yang Yue, Zhiqi Chen, Rui Lu, Andrew Zhao, Zhaokai Wang, Yang Yue, Shiji Song, Gao Huang

Paper: https://arxiv.org/abs/2504.13837, NeurIPS submission

Project page: https://limit-of-rlvr.github.io

WHAT was done? In this NeurIPS 2025 Best Paper Runner-Up, the authors systematically probed the reasoning boundaries of Large Language Models (LLMs) trained via Reinforcement Learning with Verifiable Rewards (RLVR). Using the unbiased pass@k metric across mathematics, coding, and visual reasoning tasks, they compared base models against their RL-tuned counterparts to determine if RLVR generates novel reasoning patterns or merely amplifies existing ones.

WHY it matters? The findings challenge the prevailing narrative that RLVR allows models to autonomously discover “superhuman” strategies similar to AlphaGo. The study reveals that while RLVR significantly improves sampling efficiency (correct answers appear more often), it does not expand the model’s fundamental reasoning capability boundary. In fact, for large k, base models often solve more unique problems than their RL-trained versions, suggesting that current RL methods are bounded by the priors of the pre-trained model.
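For readers unfamiliar with the metric, here is a small sketch of the standard unbiased pass@k estimator: given n samples per problem of which c are correct, pass@k = 1 − C(n−c, k)/C(n, k). I am assuming this is the estimator meant by "unbiased pass@k". The per-problem counts below are invented purely to show how the two curves can cross; they are not results from the paper.

```python
from math import comb


def pass_at_k(n, c, k):
    """Unbiased estimate of P(at least one of k samples is correct).

    n: samples drawn per problem, c: how many were correct, k: budget (k <= n).
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        return 1.0  # fewer than k wrong samples, so any k-subset contains a correct one
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(correct_counts, n, k):
    """Average pass@k over a list of per-problem correct counts."""
    return sum(pass_at_k(n, c, k) for c in correct_counts) / len(correct_counts)


if __name__ == "__main__":
    n = 256
    # Hypothetical counts for two problems (made up for illustration only).
    base_model = [128, 2]   # solves both problems, the second only rarely
    rl_model = [250, 0]     # very reliable on the first, never solves the second
    for k in (1, 256):
        print(k, mean_pass_at_k(base_model, n, k), mean_pass_at_k(rl_model, n, k))
```

In this toy setup the RL-tuned model wins at k = 1 because it samples correct answers far more often, while the base model wins at k = 256 because it can still reach the second problem at all. That is the shape of the effect the summary describes.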

Link to the full review.

Authors: Zachary Chase, Steve Hanneke, Shay Moran, Jonathan Shafer

Paper: https://openreview.net/forum?id=EoebmBe9fG

WHAT was done? The authors resolved a 30-year-old open problem in learning theory by establishing tight mistake bounds for Transductive Online Learning. In this work, recognized as a Best Paper Runner-Up at NeurIPS 2025, they proved that for a hypothesis class with Littlestone dimension d, the optimal mistake bound is Θ(√d).

WHY it matters? This result quantifies exactly how much “looking ahead” helps. It proves that access to the unlabeled sequence of future test points allows a quadratic reduction in mistakes compared to the standard online setting (where the bound is d). This closes the exponential gap between the previous best known lower bound of Ω(log d) and upper bound of O(d).
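To get a feel for how far apart these growth rates are, the snippet below tabulates d, √d and log d for a few Littlestone dimensions. It ignores the constants hidden by the asymptotic notation, and the base-2 logarithm is an arbitrary choice of mine, so read the numbers as orders of magnitude rather than actual mistake counts.

```python
import math


def bound_orders(d):
    """Growth rates only; constants hidden by Θ, Ω and O are ignored."""
    return {
        "standard": float(d),          # standard online setting: d
        "transductive": math.sqrt(d),  # new tight bound: order sqrt(d)
        "old_lower": math.log2(d),     # earlier lower bound: order log d (base assumed)
    }


if __name__ == "__main__":
    for d in (16, 256, 4096):
        print(d, bound_orders(d))
```

At d = 4096, for example, the three rates are 4096, 64 and 12, which shows both the quadratic saving over the standard setting and how wide the previously open range was.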

Link to the full review.

Authors: Yizhou Liu, Ziming Liu, and Jeff Gore

Paper: https://arxiv.org/abs/2505.10465, NeurIPS submission

Code: https://github.com/liuyz0/SuperpositionScaling

WHAT was done? The authors propose a mechanistic explanation for neural scaling laws by linking them to representation superposition. By adapting a sparse autoencoder framework and validating on open-source LLMs (OPT, Pythia, Qwen), they demonstrate that when models operate in a “strong superposition” regime (representing significantly more features than they have dimensions), the loss scales inversely with model width (L ∝ 1/m). This scaling is driven by the geometric interference between feature vectors rather than the statistical properties of the data tail.

WHY it matters? This work, a NeurIPS 2025 Best Paper Runner-Up, provides a first-principles derivation of scaling laws that is robust to data distribution. Unlike previous theories relying on manifold approximation, this research suggests that the “power law” behavior of LLMs is a geometric inevitability of compressing sparse concepts into dense spaces. It implies that overcoming these scaling barriers requires architectural interventions to manage feature interference, as simply adding more data cannot bypass the geometric bottleneck.
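One way to build intuition for the 1/m behavior is a toy check: pack many random unit vectors into m dimensions and measure their average squared overlap, which is 1/m in expectation. This is my own illustration of geometric interference between random directions, not the paper's model or code, and the function names are hypothetical.

```python
import math
import random


def random_unit_vector(m, rng):
    """Sample a direction uniformly on the unit sphere in m dimensions."""
    v = [rng.gauss(0.0, 1.0) for _ in range(m)]
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def mean_squared_overlap(m, n_features, seed=0):
    """Average squared dot product over all pairs of n_features random directions."""
    rng = random.Random(seed)
    vecs = [random_unit_vector(m, rng) for _ in range(n_features)]
    total, pairs = 0.0, 0
    for i in range(n_features):
        for j in range(i + 1, n_features):
            dot = sum(a * b for a, b in zip(vecs[i], vecs[j]))
            total += dot * dot
            pairs += 1
    return total / pairs


if __name__ == "__main__":
    for m in (8, 16, 32, 64):
        overlap = mean_squared_overlap(m, n_features=200)
        # overlap * m should stay close to 1 if the interference scales as 1/m
        print(m, overlap, overlap * m)
```

Doubling the width roughly halves the interference between features, which matches the L ∝ 1/m scaling described above if the loss is dominated by that interference.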

Link to the full review.

I hope you find this funny and useful 😁