NeurIPS 2025 Best Paper Awards Announced

Last week, NeurIPS 2025 announced its Best Paper Awards, recognizing standout research in machine learning. An automated paper review system was used to generate summaries and visuals of the award-winning papers, highlighting their significance and contributions to the field.

One standout paper, by Liwei Jiang and colleagues, introduced INFINITY-CHAT, a dataset for evaluating output diversity across more than 70 state-of-the-art large language models (LLMs). Their findings revealed a concerning phenomenon they call the “Artificial Hivemind”: individual models tend to repeat the same responses to open-ended prompts, and different models converge on highly similar outputs. This undercuts the assumption that raising the sampling temperature or ensembling several models is enough to diversify outputs. The authors argue that techniques such as Reinforcement Learning from Human Feedback (RLHF) have contributed to homogenizing the “creative” space of LLMs.
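To make “homogenization” concrete, the sketch below scores how similar a set of responses to one open-ended prompt are, using mean pairwise Jaccard similarity over lowercase word sets. This metric is an assumption chosen for simplicity: it is not the measure used in the INFINITY-CHAT paper, which the article does not describe, and the function names and example responses are hypothetical.

```python
from itertools import combinations


def jaccard(a: str, b: str) -> float:
    """Jaccard similarity between the lowercase word sets of two responses."""
    set_a, set_b = set(a.lower().split()), set(b.lower().split())
    if not set_a and not set_b:
        return 1.0  # two empty responses are treated as identical
    return len(set_a & set_b) / len(set_a | set_b)


def mean_pairwise_similarity(responses: list[str]) -> float:
    """Average similarity over all unordered pairs; higher means less diverse."""
    pairs = list(combinations(responses, 2))
    if not pairs:
        raise ValueError("need at least two responses")
    return sum(jaccard(a, b) for a, b in pairs) / len(pairs)


# Hypothetical responses to "Write a metaphor about time" from different models.
homogeneous = [
    "time is a river flowing endlessly",
    "time is a river that keeps flowing",
    "time is a flowing river",
]
varied = [
    "time is a river flowing endlessly",
    "an hourglass whispering sand into silence",
    "a thief that steals afternoons",
]
print(mean_pairwise_similarity(homogeneous))
print(mean_pairwise_similarity(varied))
```

The homogeneous set scores far higher than the varied one. A word-overlap measure misses paraphrases, so embedding-based similarity is a common alternative when responses share meaning but not wording.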

Another notable achievement came from the Qwen Team, whose paper introduced Gated Attention. The mechanism applies a learnable, input-dependent gate to the output of Scaled Dot-Product Attention (SDPA), which improves training stability and reduces loss spikes during large-scale training. By eliminating problems such as the “Attention Sink” phenomenon, this modification consistently improved performance across the models the team evaluated.
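Gated Attention can be pictured as a small change to standard attention: compute the usual SDPA output, then multiply it elementwise by a sigmoid gate derived from the current token's hidden state. The pure-Python sketch below covers a single head and a single query. The function names and weight shapes are simplifying assumptions for illustration, not the Qwen Team's implementation, which operates on batched multi-head tensors with learned projections.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(z):
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def matvec(w, x):
    """Multiply matrix w (a list of rows) by vector x."""
    return [sum(wi * xi for wi, xi in zip(row, x)) for row in w]


def sdpa(q, keys, values):
    """Scaled dot-product attention for one query over a sequence of keys/values."""
    d = len(q)
    scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
    weights = softmax(scores)
    return [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]


def gated_attention(x, q, keys, values, w_gate):
    """SDPA output multiplied elementwise by a sigmoid gate computed from hidden state x.

    w_gate (hypothetical) has one row per output dimension and one column per entry of x.
    """
    attn = sdpa(q, keys, values)
    gate = [sigmoid(g) for g in matvec(w_gate, x)]
    return [a * g for a, g in zip(attn, gate)]


x = [0.2, -0.1, 0.4]                  # hypothetical hidden state of the current token
q = [1.0, 0.0]
keys = [[1.0, 0.0], [0.0, 1.0]]
values = [[1.0, 2.0], [3.0, 4.0]]
w_half = [[0.0, 0.0, 0.0], [0.0, 0.0, 0.0]]  # every gate is sigmoid(0) = 0.5
print(gated_attention(x, q, keys, values, w_half))
```

With all-zero gate weights the output is exactly half the plain SDPA output, and strongly negative gate weights push it toward zero. One hedged intuition is that such a gate lets a head contribute little for a given token without parking attention mass on a sink token.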

A further highlight was the work of Kevin Wang and colleagues, who showed that Reinforcement Learning (RL) policies can be scaled far deeper than usual, from the typical 2 to 5 layers to over 1,000 layers. By combining Contrastive RL with modern architectural techniques, they challenged previously held beliefs about whether network depth benefits RL.
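The article does not spell out the training objective. As a hedged illustration, contrastive RL methods typically learn a critic that scores a state-action pair against a goal through the dot product of two learned embeddings. Training pushes a future state from the same trajectory to score higher than goals taken from other trajectories in the batch. The sketch below implements only that InfoNCE-style loss on toy vectors; the names `infonce_loss`, `sa_embeddings`, and `goal_embeddings` are hypothetical, and in practice the deep networks the paper scales would produce the embeddings.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def infonce_loss(sa_embeddings, goal_embeddings):
    """Mean cross-entropy where row i's positive is goal i (e.g. a future state
    from the same trajectory) and the other goals in the batch are negatives."""
    n = len(sa_embeddings)
    total = 0.0
    for i in range(n):
        logits = [dot(sa_embeddings[i], g) for g in goal_embeddings]
        m = max(logits)
        log_norm = m + math.log(sum(math.exp(z - m) for z in logits))
        total += log_norm - logits[i]  # -log softmax probability of the positive
    return total / n


sa = [[5.0, 0.0], [0.0, 5.0]]      # toy phi(s, a) embeddings
goals = [[1.0, 0.0], [0.0, 1.0]]   # toy psi(g) embeddings, matched by index
print(infonce_loss(sa, goals))
```

When every score is equal the loss is log(batch size); it falls as each state-action embedding lines up with its own goal and away from the others.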

Another insightful paper, by Tony Bonnaire and co-authors, analyzed the training dynamics of score-based diffusion models and identified two critical timescales: an earlier one at which models begin to generate high-quality samples, and a later one at which they start to memorize the training data. Because the memorization timescale grows with the size of the training set, the study clarifies why overparameterized models generalize well even though they are capable of memorizing their training data, refining the understanding of how such models should be trained.

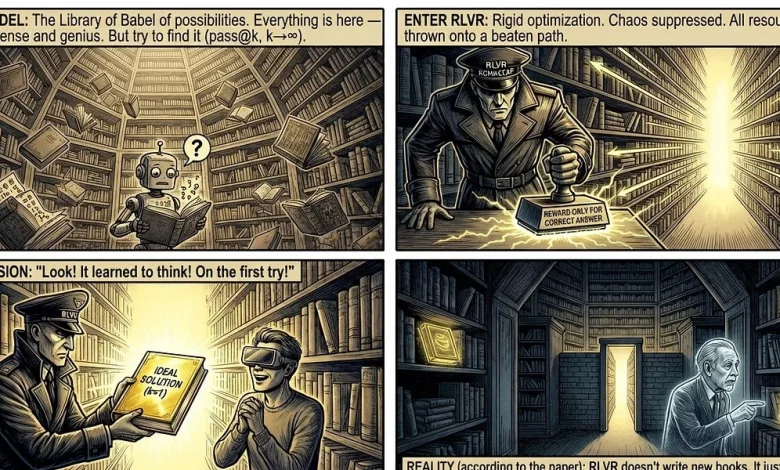

In an intriguing study, Yang Yue and colleagues explored the limits of LLMs trained with Reinforcement Learning with Verifiable Rewards (RLVR). Their research suggests that while RLVR improves sampling efficiency, it does not fundamentally expand the models' reasoning capabilities, calling into question whether current RL methods teach models genuinely new reasoning abilities.
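The article does not say how sampling efficiency and reasoning capability were measured. One common way to separate the two is pass@k: the probability that at least one of k sampled answers is correct. The sketch below uses the standard unbiased estimator 1 - C(n-c, k)/C(n, k), where n answers are sampled per problem and c of them are correct. The per-problem counts are invented for illustration and are not results from the paper.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k: chance that a random size-k subset of n samples,
    c of which are correct, contains at least one correct sample."""
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("require 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        return 1.0  # every size-k subset must contain a correct sample
    return 1.0 - comb(n - c, k) / comb(n, k)


# Hypothetical correct counts out of n = 100 samples on two problems (not paper data).
sharpened = [60, 0]  # reliable on problem 1, never solves problem 2
broad = [5, 5]       # rarely right, but sometimes right on both problems
n = 100
for k in (1, 100):
    a = sum(pass_at_k(n, c, k) for c in sharpened) / len(sharpened)
    b = sum(pass_at_k(n, c, k) for c in broad) / len(broad)
    print(f"pass@{k}: sharpened={a:.2f} broad={b:.2f}")
```

With these made-up counts the “sharpened” model wins at k = 1 (0.30 vs 0.05), while the “broad” model wins at k = 100 (1.00 vs 0.50). Better single-sample accuracy does not by itself mean more problems can be solved at all, which is the kind of distinction the RLVR finding turns on.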

Another impressive contribution, awarded Best Paper Runner-Up, came from Zachary Chase and colleagues, who resolved a 30-year-old open question in learning theory by establishing tight mistake bounds for Transductive Online Learning. Their results quantify how much advance access to the future test points reduces a learner's mistakes, sharpening the theoretical understanding of this setting.

Yizhou Liu and co-authors offered a mechanistic explanation of neural scaling laws, connecting them to representation superposition. Their research indicates that in a “strong superposition” regime, loss scales inversely with model width, suggesting that architectural improvements could be essential for overcoming the limits of model scaling.
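A loss that scales inversely with width is a power law with exponent -1: plotted on log-log axes, loss against width is a straight line of slope -1. The sketch below recovers such an exponent with an ordinary least-squares fit in log space. The widths and the constant `c` are arbitrary, and the losses are synthetic values generated from an assumed law loss = c / width, not measurements from the paper.

```python
import math


def fit_power_law_exponent(widths, losses):
    """Least-squares slope of log(loss) against log(width): alpha in loss ~ width**alpha."""
    xs = [math.log(w) for w in widths]
    ys = [math.log(loss) for loss in losses]
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var


widths = [64, 128, 256, 512, 1024]
c = 3.0  # arbitrary constant; synthetic losses assumed to follow c / width
losses = [c / m for m in widths]
print(round(fit_power_law_exponent(widths, losses), 6))
```

The fit returns -1.0 up to floating-point rounding. Real measurements would be noisy and usually include an irreducible loss term that a plain log-log fit ignores.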

Overall, the papers awarded at NeurIPS 2025 advance theoretical understanding while also offering practical insights that are likely to shape future directions in AI research and applications.